Everything you always wanted to know about Process Mining, but were afraid to ask.

Companies use information systems to enhance the processing of their business transactions. Enterprise resource planning (ERP) and workflow management systems (WFMS) are the predominant information system types that are used to support and automate the execution of business processes. Business processes like procurement, operations, logistics, sales and human resources can hardly be imagined without the integration of information systems that support and monitor relevant activities in modern companies. The increasing integration of information systems does not only provide the means to increase effectiveness and efficiency. It also opens up new possibilities of data access and analysis. When information systems are used for supporting and automating the processing of business transactions they generate data. This data can for example be used for improving business decisions.

The application of techniques and tools for generating information from digital data is called business intelligence (BI). Prominent approaches which make use of BI include online analytical processing (OLAP) and data mining. OLAP tools allow for the analysis of multidimensional data using operators like roll-up and drill-down, slice and dice or split and merge (Source: Wikipedia (OLAP cube)):

- Dice: The dice operation produces a subcube by allowing the analyst to pick specific values of multiple dimensions.

- Drill-down/up: Drilling down or up lets the user navigate among levels of data ranging from the most summarized (up) to the most detailed (down).

- Roll-up: A roll-up involves summarizing the data along a dimension.

- Split: A split cuts a cube into pieces.

- Merge: A merge combines several parts of cubes.
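The operators listed above can be illustrated with a minimal sketch in plain Python. The fact table `SALES`, its dimensions (`year`, `region`, `product`) and the helper names `roll_up` and `dice` are hypothetical and chosen only for illustration; real OLAP tools work on far larger cubes with dedicated engines.

```python
from collections import defaultdict

# Hypothetical fact table: one row per (year, region, product) cell of a cube.
SALES = [
    {"year": 2016, "region": "North", "product": "A", "amount": 100},
    {"year": 2016, "region": "South", "product": "A", "amount": 80},
    {"year": 2017, "region": "North", "product": "B", "amount": 120},
    {"year": 2017, "region": "South", "product": "A", "amount": 60},
]


def roll_up(rows, keep):
    """Summarize the measure over every dimension that is not listed in `keep`."""
    totals = defaultdict(int)
    for row in rows:
        key = tuple(row[dim] for dim in keep)
        totals[key] += row["amount"]
    return dict(totals)


def dice(rows, **allowed):
    """Return the subcube whose dimension values lie in the allowed sets."""
    return [
        row for row in rows
        if all(row[dim] in values for dim, values in allowed.items())
    ]


# Roll-up: summarize along region and product, keeping only the year.
by_year = roll_up(SALES, keep=["year"])

# Drill-down: the same totals at a more detailed level (year and region).
by_year_region = roll_up(SALES, keep=["year", "region"])

# Dice: pick specific values of several dimensions at once.
north_a = dice(SALES, region={"North"}, product={"A"})
```

In this sketch, drilling down is simply a roll-up that keeps more dimensions, which mirrors the idea of moving from summarized to detailed data.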

Data mining is primarily used for discovering patterns in large data sets. But the availability of data is not only a blessing as a new source of information; it can also become a curse. The phenomena of information overload, data explosion and big data illustrate several problems that arise from the availability of enormous amounts of data. Humans are only able to handle a certain amount of information in a given time frame. When more and more data is available, how can it actually be used in a meaningful manner without overstraining the human recipient?

Data mining is the analysis of data for finding relationships and patterns. The patterns are an abstraction of the analyzed data. Abstraction reduces complexity and makes information available for the recipient. The aim of process mining is the extraction of information about business processes. Process mining encompasses “techniques, tools and methods to discover, monitor and improve real processes by extracting knowledge from event logs”. The data that is generated during the execution of business processes in information systems is used for reconstructing process models. These models are useful for analyzing and optimizing processes. Process mining is an innovative approach and builds a bridge between data mining and business process management.

Process mining evolved in the context of analyzing software engineering processes by Cook and Wolf in the late 1990s. Agrawal and Gunopulos as well as Herbst and Karagiannis introduced process mining to the context of workflow management. Major contributions to the field have been made during the last decade by van der Aalst and other researchers, who developed mature mining algorithms and addressed a variety of topic-related challenges. This has led to a well-developed set of methods and tools that are available for scientists and practitioners.

Process Models and Event Logs

The aim of process mining is the construction of process models based on available logging data. In the context of information system science, a model is an immaterial representation of its real world counterpart used for a specific purpose. Models can be used to reduce complexity by representing characteristics of interest and by omitting other characteristics. A process model is a graphical representation of a business process that describes the dependencies between activities that need to be executed collectively for realizing a specific business objective. It consists of a set of activity models and constraints between them.

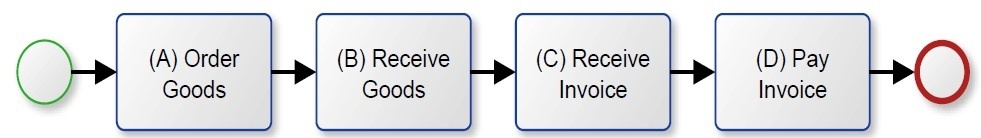

Process models can be represented in different process modeling languages, for example the Business Process Model and Notation (BPMN), Event-driven Process Chains (EPC) or Petri Nets. Petri Nets are the dominant modeling language in the field of process mining. While the formal expressiveness of the Petri Net language is strong, it is less suitable for readers who are not familiar with its syntax and semantics. BPMN provides more intuitive semantics that are easier to understand for readers without a theoretical background in informatics. We are therefore going to rely on BPMN models for illustrative purposes in this article. Figure 1 shows a business process model of a simple purchasing process. It starts with the ordering of goods. At some point in time, the ordered goods get delivered. After the goods have been received, the supplier issues an invoice, which is finally paid by the company that ordered the goods.

The illustrated process model was created manually. So we do not know if the model actually reflects reality. There might, for example, be cases in which invoices are paid before the goods and invoices have been delivered. For ordered services, there might even be no such step as a recorded delivery. The question arises: How can we get reliable information about whether and how the business process has really been executed?

The approach used in process mining for answering this question is based on the exploitation of data stored in information systems that is created during the processing of business transactions. An information system stores data in log files or database tables when processing transactions. In the case of issuing an order, data about the type and quantity of ordered goods, preferred suppliers, time of ordering etc. gets recorded. The stored data can be extracted from the information system and be made available in so-called event logs. They constitute the data that form the basis for process mining algorithms.

| Case ID | Event ID | Timestamp | Activity |
|---|---|---|---|
| 1 | 1000 | 01/01/2017 | Order Goods |
| 1 | 1001 | 10/01/2017 | Receive Goods |
| 1 | 1002 | 13/01/2017 | Receive Invoice |
| 1 | 1003 | 20/01/2017 | Pay Invoice |
| 2 | 1004 | 02/01/2017 | Order Goods |
| 2 | 1005 | 01/01/2017 | Receive Goods |
| … | … | … | … |

Table 1: Event Log Structure

An event log is basically a table. It contains all recorded events that relate to executed business activities. Each event is mapped to a case. A process model is an abstraction of the real-world execution of a business process. A single execution of a business process is called a process instance. Process instances are reflected in the event log as sets of events that are mapped to the same case. The sequence of recorded events in a case is called a trace. The model that describes the execution of a single process instance is called a process instance model. A process model abstracts from the behavior of individual process instances and reflects the behavior of all instances that belong to the same process. Cases and events are characterized by classifiers and attributes. Classifiers ensure the distinctness of cases and events by mapping unique names to each case and event. Attributes store additional information that can be used for analysis purposes. An example of an event log is given in Table 1.
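As a minimal sketch of how cases, events and traces relate, the rows of Table 1 can be grouped by case ID in Python. The sketch assumes that rows without an explicit case ID belong to the case listed above them and that event IDs reflect the order in which events were recorded; the names `EVENT_LOG` and `traces_by_case` are hypothetical.

```python
from collections import defaultdict

# Rows of Table 1 as (case ID, event ID, timestamp, activity).
# Assumption: rows without a case ID belong to the preceding case.
EVENT_LOG = [
    (1, 1000, "01/01/2017", "Order Goods"),
    (1, 1001, "10/01/2017", "Receive Goods"),
    (1, 1002, "13/01/2017", "Receive Invoice"),
    (1, 1003, "20/01/2017", "Pay Invoice"),
    (2, 1004, "02/01/2017", "Order Goods"),
    (2, 1005, "01/01/2017", "Receive Goods"),
]


def traces_by_case(events):
    """Group events by case ID and return each case's sequence of activities."""
    grouped = defaultdict(list)
    for case_id, event_id, _timestamp, activity in events:
        grouped[case_id].append((event_id, activity))
    # Assumption: sorting by event ID reproduces the recording order.
    return {
        case_id: [activity for _, activity in sorted(rows)]
        for case_id, rows in grouped.items()
    }


traces = traces_by_case(EVENT_LOG)
for case_id, trace in traces.items():
    print(case_id, " -> ".join(trace))
```

Each dictionary entry corresponds to one process instance, and its list of activities is the trace of that case.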

Mining Procedure

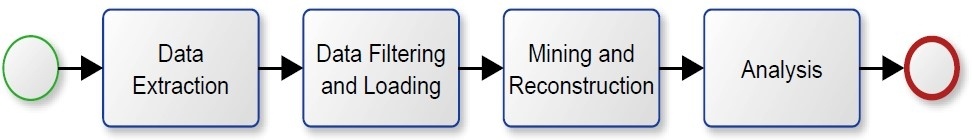

Figure 2 provides an overview of the different process mining activities. Before being able to apply any process mining technique, it is necessary to have access to the data. It needs to be extracted from the relevant information systems. This step is far from trivial. Depending on the type of source system, the relevant data can be distributed over different database tables. Data entries might need to be composed in a meaningful manner for the extraction. Another obstacle is the amount of data.

Depending on the objective of the process mining project, up to millions of data entries might need to be extracted, which requires efficient extraction methods. A further important aspect is confidentiality. Extracted data might include personal information and, depending on legal requirements, anonymization or pseudonymization might be necessary.
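One possible way to pseudonymize personal attributes during extraction is a keyed hash, sketched below with Python's standard `hmac` and `hashlib` modules. The `user` attribute, the sample events and the key are hypothetical, and whether such a scheme satisfies a given legal requirement has to be assessed separately.

```python
import hashlib
import hmac

# Hypothetical secret key; it must be stored separately from the event log.
SECRET_KEY = b"replace-with-a-secret-key"


def pseudonymize(value, key=SECRET_KEY):
    """Map an identifier to a stable pseudonym using HMAC-SHA256."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:12]  # shortened for readability in this sketch


# Hypothetical events with a personal attribute "user".
events = [
    {"case": 1, "activity": "Order Goods", "user": "alice"},
    {"case": 1, "activity": "Pay Invoice", "user": "bob"},
    {"case": 2, "activity": "Order Goods", "user": "alice"},
]

pseudonymized = [{**event, "user": pseudonymize(event["user"])} for event in events]
```

The same user always receives the same pseudonym, so analyses of who performed which activities remain possible without exposing the original names in the event log.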

Before the extracted event log can be used, it needs to be filtered and loaded into the process mining software. There are different reasons why filtering is necessary. Information systems are not free of errors. Data may be recorded that does not reflect real activities. Errors can result from malfunctioning programs, but also from user disruption or hardware failures that lead to erroneous records in the event log. Other errors can occur even when processing is correct. A specific process is normally analyzed for a certain time frame. When the data is extracted from the source system, process instances that were executed across the boundaries of the selected time frame can get truncated. They need to be deleted from the event log or extracted completely. Otherwise they lead to erroneous results in the reconstructed process models. Event logs commonly do not contain data for a single process only. Filtering is necessary to reduce the event log so that it only contains events that belong to the process under scrutiny. Such filtering needs to be conducted carefully because it can lead to truncated process instances as well. A common criterion is the selection of activities that are known to belong to the same process. Data filtering and loading are commonly supported by software tools and performed in a single step, but they can also be done separately.
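The two filtering steps described above, selecting the activities of one process and removing truncated process instances, could look roughly like the following sketch. It assumes, purely for illustration, that every complete purchasing instance starts with "Order Goods" and ends with "Pay Invoice"; the sample traces and the unrelated activity "Create User" are hypothetical.

```python
# Activities that are known to belong to the purchasing process.
PROCESS_ACTIVITIES = {"Order Goods", "Receive Goods", "Receive Invoice", "Pay Invoice"}

# Assumption for this sketch: complete instances start and end with these.
START_ACTIVITY = "Order Goods"
END_ACTIVITY = "Pay Invoice"

# Hypothetical traces, keyed by case ID.
traces = {
    1: ["Order Goods", "Receive Goods", "Receive Invoice", "Pay Invoice"],
    2: ["Order Goods", "Receive Goods"],        # cut off at the end of the time frame
    3: ["Receive Invoice", "Pay Invoice"],      # started before the time frame
    4: ["Order Goods", "Create User", "Receive Goods", "Receive Invoice", "Pay Invoice"],
}


def keep_process_events(trace, activities=PROCESS_ACTIVITIES):
    """Drop events whose activity does not belong to the analyzed process."""
    return [activity for activity in trace if activity in activities]


def is_complete(trace):
    """Treat a trace as complete if it has the expected first and last activity."""
    return bool(trace) and trace[0] == START_ACTIVITY and trace[-1] == END_ACTIVITY


filtered = {}
for case_id, trace in traces.items():
    projected = keep_process_events(trace)
    if is_complete(projected):
        filtered[case_id] = projected
```

Cases 2 and 3 are removed as truncated, while case 4 is kept after the unrelated event has been dropped. In practice the completeness criterion depends on the process and is rarely as simple as a fixed start and end activity.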

Once the data is loaded into the process mining software, the actual mining and reconstruction of the process model can take place. The mining includes the discovery of relationships in the event log whereas the reconstruction produces a process model as a graphical representation. The mining and reconstruction are commonly provided by the same software tool in a single step.
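To give an impression of what discovering relationships in an event log can mean, the sketch below counts how often one activity is directly followed by another. This directly-follows relation is a common starting point for discovery techniques, but it is used here only as an illustration and is not the specific algorithm of any particular tool; the sample traces are hypothetical.

```python
from collections import Counter

# Hypothetical traces of the purchasing process, one list per case.
traces = [
    ["Order Goods", "Receive Goods", "Receive Invoice", "Pay Invoice"],
    ["Order Goods", "Receive Goods", "Receive Invoice", "Pay Invoice"],
    ["Order Goods", "Receive Invoice", "Pay Invoice", "Receive Goods"],
]


def directly_follows(traces):
    """Count how often activity a is immediately followed by activity b."""
    counts = Counter()
    for trace in traces:
        # zip pairs every event with its direct successor in the same trace.
        counts.update(zip(trace, trace[1:]))
    return counts


dfg = directly_follows(traces)
for (source, target), frequency in sorted(dfg.items()):
    print(f"{source} -> {target}: {frequency}")
```

The resulting pairs can be read as the edges of a graph, with the counts as edge weights. A reconstruction step would then turn such relations into a graphical process model.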

Once the process models have been mined and reconstructed, they can be used for the intended purpose. We summarize this step under the term analysis. A fundamental goal of process mining is the discovery of formerly unknown processes. In this case, the reconstruction is an end in itself. But it is not everything. The analysis may also pursue additional objectives like identifying opportunities for process optimization, examining organizational aspects, or performing conformance and compliance analysis.
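A very simple form of conformance analysis can be sketched by comparing traces with the order of activities in the purchasing process of Figure 1. The sketch assumes a strictly sequential reading of that model and checks only neighboring activities; the function name `violated_constraints` and the sample traces are hypothetical, and established conformance checking techniques are considerably more elaborate.

```python
# Assumption: Figure 1 is read as a strict sequence of these four activities.
MODEL_ORDER = ["Order Goods", "Receive Goods", "Receive Invoice", "Pay Invoice"]


def violated_constraints(trace, model_order=MODEL_ORDER):
    """Return pairs (earlier, later) where `later` occurs without `earlier` before it."""
    # Index of the last occurrence of each activity in the trace.
    position = {activity: index for index, activity in enumerate(trace)}
    violated = []
    for earlier, later in zip(model_order, model_order[1:]):
        if later not in position:
            continue  # nothing to check if the later activity never happened
        if earlier not in position or position[earlier] > position[later]:
            violated.append((earlier, later))
    return violated


conforming = ["Order Goods", "Receive Goods", "Receive Invoice", "Pay Invoice"]
goods_last = ["Order Goods", "Receive Invoice", "Pay Invoice", "Receive Goods"]
no_delivery = ["Order Goods", "Receive Invoice", "Pay Invoice"]

result_conforming = violated_constraints(conforming)
result_goods_last = violated_constraints(goods_last)
result_no_delivery = violated_constraints(no_delivery)
```

The second and third trace are flagged because an invoice was received without a preceding receipt of goods, which corresponds to the kind of deviation from the manually created model discussed earlier.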

In the next post of this series you can read more about Process Mining Algorithms, before we come to the different types of Process Mining in the third blog post.

A longer version of this article appeared as: Gehrke, N., Werner, M.: Process Mining, in: WISU – Wirtschaft und Studium, July edition 2013, pp. 934–943